Have they weaponized hurricanes?

No. At least not successfully. Here I have condensed what we know about hurricanes and why they're beyond anybody's ability to control.

After Hurricane Helene wreaked havoc across the Southeast of the United States, Hurricane Milton is about to make landfall on Florida’s Gulf coast, also with catastrophic potential. The US Government’s inability or unwillingness to adequately respond to the resulting disasters has unleashed heated discussions on social media, including the speculation that they are somehow deliberately engineering these storms.

The conspiracy theories can’t be dismissed; our government agencies as well as certain oligarchs like Bill Gates have been busy playing God with climate-engineering technologies like cloud-seeding, HAARP (High-Frequency Active Auroral Research Program), AAI (atmospheric aerosol injections), carbon sequestration, sun dimming, and who knows what else. These technologies exist, and they can and do influence the weather. Here’s the former CIA director John Brennan talking about AAI in 2016:

Clearly, they’re busy messing with the climate. At the same time, however, I very much doubt that they are capable of controlling much of anything. In fact, tropical storms and hurricanes vividly expose the limitations of science and technology in understanding and forecasting the behavior of complex systems. Hurricane forecasts have greatly improved over the last few decades, and the 24- and 48-hour forecasts of their trajectories tend to be fairly good. Good, however, isn’t the same as accurate, and our ability to predict a hurricane’s intensity isn’t even good. If we can’t predict something, how would we even begin to control it?

How hurricanes form

Scientists’ ability to predict a hurricane’s trajectory has improved thanks to our greatly enhanced understanding of how hurricanes form and why they move the way they do. Here’s a condensed account of what we do know about hurricanes. They likely originate from the low and mid-level atmospheric winds blowing from the east across the Ethiopian highlands. As they blow over these high mountains, they form vortices that drift westward. When they reach the Atlantic Ocean, moist monsoon winds from the Gulf of Guinea inject humidity into the vortices. If enough humidity meets a sufficiently strong vortex, masses of clouds begin forming rapidly.

Out of the total of around a hundred such easterly waves, twenty to thirty will move each season across the Atlantic with the potential to turn into tropical depressions given the right conditions – primarily high humidity and heat. As water temperatures off the west coast of Africa warm to their late-summer peak, moist air begins to rise high into the atmosphere adding more energy to the system and providing humidity for continued cloud formation.

For a large storm to form, the following ingredients are required: the ocean’s surface temperature must rise above 26 degrees Celsius (79 degrees Fahrenheit); the pool of warm water must span at least a few hundred square miles and be at least 60 meters (197 feet) deep; a large layer of warm, very humid air must also be present, extending from the ocean’s surface to an altitude of some 5,500 meters (18,000 feet). In such conditions, normally in place from June until December and peaking from August through October, the atmospheric disturbances caused by the easterly waves can trigger the formation of large storms.

One element, however, must be absent for a hurricane to form: strong, variable winds aloft. Winds in the atmosphere over the large pool of warm ocean must be mild or consistent from the ocean surface to an altitude of at least 12,000 meters (40,000 feet), because strong and varied winds tend to tear cyclones apart. This is the key reason why hurricanes don’t form in the southern Atlantic.

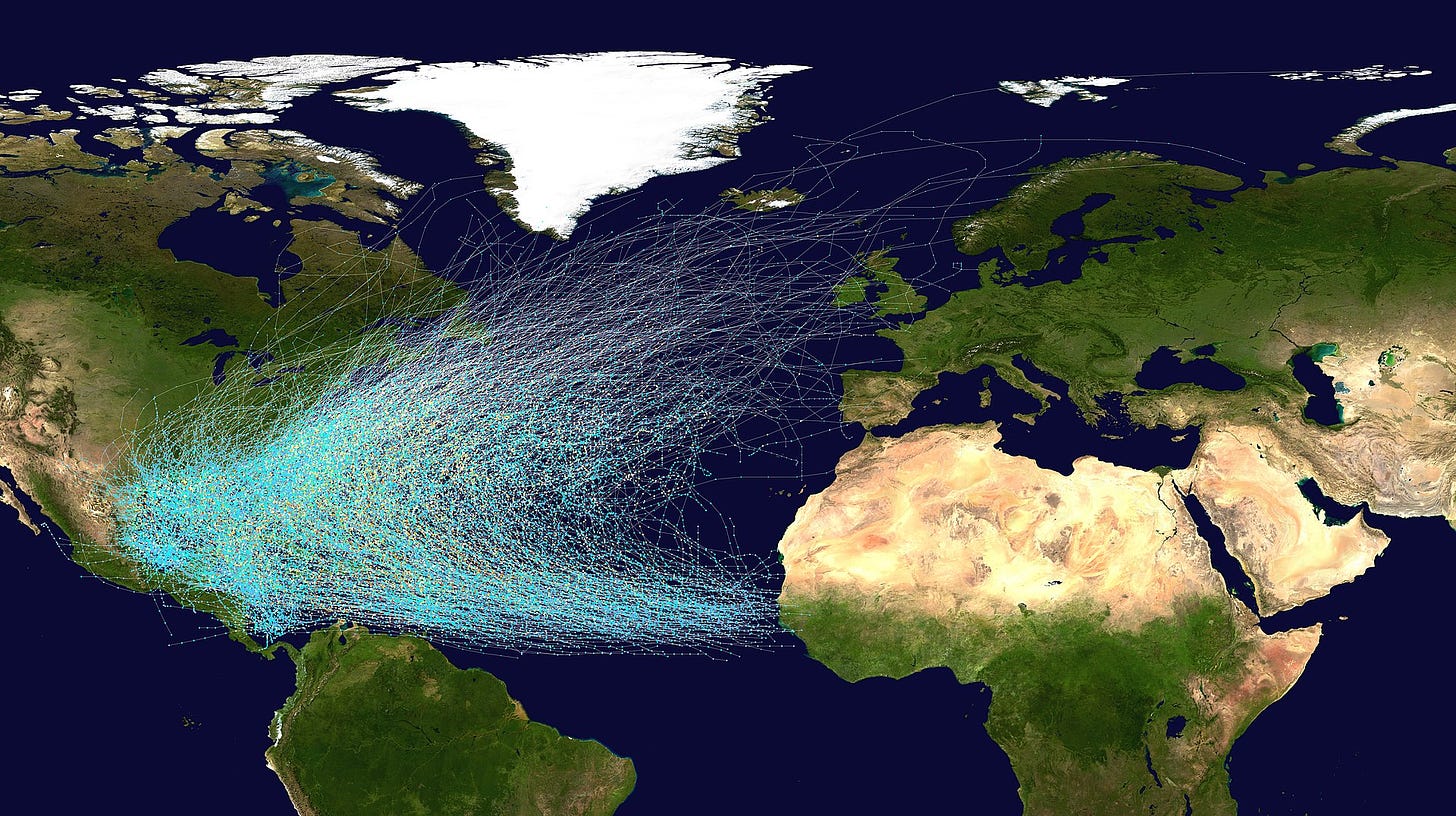

The trajectory of hurricanes is determined in part by Earth’s rotation, so they predictably move from east to west, rising gradually in latitude along the way. A large area of high atmospheric pressure usually present around Bermuda (the Bermuda High) causes the hurricanes to swerve northwards and then continue to move toward the northeast, following the Gulf Stream. The regularity of these conditions gives hurricanes a somewhat predictable trajectory, as the map shows.

What I presented above is only a very crude summary of a vast and detailed body of knowledge scientists have accumulated about the formation of hurricanes. But in spite of all that knowledge and a great deal of technology enabling storm monitoring in real time, we can never be sure which disturbances will grow into hurricanes and which ones will dissipate or pass with only minor rainstorms. The structure of hurricanes is highly variable and can change from one day to the next, leaving scientists unsure about exactly how all the factors in the atmosphere interact to cause hurricanes to gain or lose strength.

Modelling the storms

Mind-numbing advances in computer modelling of storms have enhanced meteorologists’ ability to work out probable outcomes, but not to make accurate predictions. Weather forecasting models used by meteorologists today fall into three distinct categories:

- statistical models,
- dynamical models, and
- hybrid statistical-dynamical models.

Statistical models start with information such as a storm’s location and the time of the year, and make a prediction based on previously observed storms at the same location and at the same time of the year. Such models are based on the assumption that over the next 24 or 48 hours, the present storm will behave similarly to the previous ones.
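To make the idea concrete, here is a minimal sketch of an analog-style statistical forecast. It is not any forecasting agency's actual method: the function name `analog_forecast`, the matching window `max_deg`, and every number in `PAST_STORMS` are made up purely for illustration.

```python
from statistics import mean

# Made-up records, for illustration only:
# (latitude, longitude, month, 24-hour change in latitude, 24-hour change in longitude)
PAST_STORMS = [
    (25.0, -85.0, 10, 1.5, 1.0),
    (26.0, -86.0, 10, 2.5, 2.0),
    (24.0, -84.0, 10, 2.0, 1.5),
    (15.0, -50.0, 8, 0.5, -3.0),
    (25.5, -85.5, 7, 1.0, -1.0),
]


def analog_forecast(lat, lon, month, history, max_deg=3.0):
    """Forecast the position 24 hours ahead from similar past storms.

    A past storm counts as an analog if it occurred in the same month
    and within `max_deg` degrees of the current position.
    """
    analogs = [
        (dlat, dlon)
        for h_lat, h_lon, h_month, dlat, dlon in history
        if h_month == month
        and abs(h_lat - lat) <= max_deg
        and abs(h_lon - lon) <= max_deg
    ]
    if not analogs:
        return None  # no comparable storms on record
    # Assume the present storm moves like the average of its analogs.
    avg_dlat = mean(d[0] for d in analogs)
    avg_dlon = mean(d[1] for d in analogs)
    return (lat + avg_dlat, lon + avg_dlon)


forecast = analog_forecast(25.0, -85.0, 10, PAST_STORMS)
print(forecast)
```

The whole forecast rests on the assumption named above: that the present storm behaves like its predecessors. When it doesn't, the model has no way of knowing.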

Dynamical models analyze all the available information about the storm and its adjacent weather conditions and use the basic laws of fluid dynamics in the Earth’s atmosphere to forecast the future development of the storm. Concretely, scientists use six specific mathematical equations to describe the Earth’s atmosphere: three hydrodynamic equations that rely on Newton’s second law of motion to find horizontal and vertical motions of air caused by air pressure differences, gravity, friction, and the Earth’s rotation; two thermodynamic equations that calculate changes in temperature caused by water evaporation, vapor condensation and similar occurrences; and one continuity equation that accounts for the volume of air entering or leaving the area.
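For readers who want to see what such a system looks like, one common textbook way of writing the six equations is sketched below. The notation is my assumption, not necessarily the form used in any particular forecasting model: v is the wind vector (three components, hence three equations), ρ air density, p pressure, Ω the Earth’s rotation vector, g gravity, F friction, T temperature, c_p the specific heat of air at constant pressure, Q the heating rate, q_v the water vapor content, and E and C the rates of evaporation and condensation.

```latex
\begin{align}
\frac{D\mathbf{v}}{Dt} &= -\frac{1}{\rho}\nabla p
    - 2\,\boldsymbol{\Omega}\times\mathbf{v} + \mathbf{g} + \mathbf{F}
  && \text{(motion: three components)} \\
c_p\frac{DT}{Dt} - \frac{1}{\rho}\frac{Dp}{Dt} &= Q
  && \text{(thermodynamic energy)} \\
\frac{Dq_v}{Dt} &= E - C
  && \text{(water vapor)} \\
\frac{D\rho}{Dt} + \rho\,\nabla\cdot\mathbf{v} &= 0
  && \text{(continuity)}
\end{align}
```

Here D/Dt denotes the rate of change following a moving parcel of air.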

Building up the monitoring grid

With the rapid evolution of computer technology, scientists could handle the increasing levels of complexity that these equations entail. They have advanced by modelling the Earth’s atmosphere as a three-dimensional grid consisting of a number of horizontal data points stacked in a number of atmospheric layers.

One of the first such models was developed in the mid-1950s by the US Weather Bureau. Its grid was rather crude, consisting of a single level of the atmosphere at about 18,000 feet (5,500 meters) and data points spaced 248 miles apart. In the 1970s and 1980s, the Hurricane Center developed a much more complex model consisting of ten layers of atmosphere with grid points 37 miles apart. At the time, computers couldn’t handle this model’s complexity over a large area, so the grid had to move about with the storm, keeping it in the center of an area covering 1,860 miles on each side (it was called the Movable Fine Mesh model).

In the 1990s, the Geophysical Fluid Dynamics Laboratory (GFDL) within the National Oceanic and Atmospheric Administration research center in Princeton, New Jersey developed a model that analyzed data at 18 levels of the atmosphere within three nested grids, the finest of which covered an area of 345 square miles with data points 11.5 miles apart. GFDL’s model thus consisted of some 16,200 points receiving data in time steps of 15 seconds. Even at such fine resolution, the model could only represent a hurricane with an idealized vortex structure based upon only a handful of parameters of the real storm (maximum winds and the distance of maximum winds from the storm center).
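The point count quoted above can be checked with simple arithmetic. The sketch below assumes the finest grid is square and roughly 345 miles on each side (my assumption; it is the reading under which the numbers add up). All variable names are illustrative.

```python
# Assumption: the finest nested grid is square, about 345 miles on each side.
side_miles = 345.0
spacing_miles = 11.5
levels = 18
time_step_seconds = 15

points_per_side = round(side_miles / spacing_miles)
total_points = points_per_side ** 2 * levels

# Number of 15-second time steps needed to cover a 24-hour forecast.
steps_per_day = 24 * 60 * 60 // time_step_seconds
point_updates_per_day = total_points * steps_per_day

print(points_per_side, total_points, steps_per_day, point_updates_per_day)
```

Under that assumption the grid has 30 points per side and 16,200 points in total, and a 24-hour forecast requires 5,760 time steps, or some 93 million point updates, each involving all six equations.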

In parallel, the US Navy developed its own, somewhat less detailed model named the Navy Operational Global Atmospheric Prediction System (NOGAPS). NOGAPS consisted of a horizontal grid resolution of about 52 miles with 45 data points in each layer over an area covering 345 square miles. Running on the Cray C90 supercomputer, NOGAPS took about 20 minutes to produce a 24-hour forecast. If the NOGAPS model were run with the finer grid of the GFDL model, it would require about a week’s time to produce a 24-hour forecast.

Since the late 1990s, further advances have taken place in model resolution,[1] speed of computation and processing capacity. Nevertheless, the practical reality of the problem seems to be converging upon the theory (of computation): as computer models of storms have gone from cruder to finer grid resolution, their complexity has increased exponentially, requiring exponentially greater computing power to produce timely forecasts. The visualization below was generated with data for Hurricane Douglas (July 2020) from NOAA’s Weather and Climate Toolkit:

The amount of data that’s collected is impressive, but the resolution is still rather grainy. Nonetheless, the fatal flaw of computer modelling is not only in the resolution of models or in the processing speeds of computers.

The brick wall of complexity

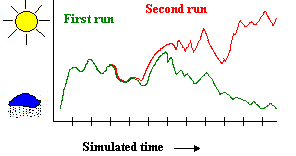

An impossible problem also lies in the complex models’ sensitivity to input data. Namely, very small differences in the values of initial variables can lead to very large variations in outcomes. This seemingly insurmountable problem in modelling complex systems was discovered by MIT’s theoretical meteorologist Edward Lorenz, who had developed a relatively straightforward computer model emulating the weather.

One day in 1961, Lorenz resolved to rerun one particular simulation starting at the half-way point, using the values he had for that point in his print-outs. The new simulation quickly started diverging from the original results and soon bore no resemblance to them.

The ultimate explanation for this divergence had profound implications for science: while Lorenz’s program took its calculations to six decimal places, his print-outs only showed the values to three decimal places. The minute difference between, say, 1.234567 and 1.235 applied in the second simulation led to very large differences in the final results. Lorenz termed this phenomenon “sensitive dependence on initial conditions.”
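The effect is easy to reproduce. The sketch below is not Lorenz’s 1961 weather model; as a stand-in it uses the much simpler three-variable system he published in 1963, with its standard parameter values. It runs a simulation to a midpoint, then restarts it twice: once from the full-precision state and once from the same state rounded to three decimal places, as on a print-out. The step size, run lengths and starting point are arbitrary choices of mine.

```python
def lorenz_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz 1963 system by one fourth-order Runge-Kutta step."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(a + h * b for a, b in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run(state, steps):
    """Return the list of states visited, starting from `state`."""
    path = [state]
    for _ in range(steps):
        state = lorenz_step(state)
        path.append(state)
    return path


def distance(a, b):
    """Straight-line distance between two states."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


# First half of the "original" run, at full floating-point precision.
midpoint = run((1.0, 1.0, 1.0), 1500)[-1]

# The "print-out": the same midpoint, kept to three decimal places only.
printed = tuple(round(v, 3) for v in midpoint)

original = run(midpoint, 3000)
rerun = run(printed, 3000)

separation = [distance(a, b) for a, b in zip(original, rerun)]
initial_gap = separation[0]
largest_gap = max(separation)
print(f"gap at restart: {initial_gap:.6f}")
print(f"largest gap afterwards: {largest_gap:.2f}")
```

The two runs start less than a thousandth apart and end up as far from each other as two unrelated states of the system.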

We have every reason to expect that other complex systems will display a similar sensitivity, implying that the problem of accuracy of measurements poses another stumbling block in science’s attempt to get to the bottom of such systems. Indeed, accurate prediction will likely remain unattainable regardless of advances in all areas of research. As Bob Sheets, the former director of the National Hurricane Center in Miami put it:

“The grid for the computer models does keep getting smaller and smaller, but we’re still talking in terms of miles, while the actual weather is taking place at the level of molecules.” [2]

Hurricanes, like all other complex systems, will continue to defy prediction. Simply put, our efforts to understand and predict a system’s behaviour are up against a brick wall of complexity. Beyond the very near term, the whole business of forecasting amounts to educated guesswork.

“They”: cruel, sadistic and stupid juveniles

To suppose that anyone would actually be capable of controlling such systems and achieving desired outcomes is to credit them with omniscience and omnipotence that are beyond the human grasp. It takes a particular mental disposition to venture to play God with our societies, economies, viruses, earthquakes or weather modification technologies.

Based on what we’ve seen so far, the people who are inclined to do so have much more in common with cruel, sadistic and stupid juveniles than they do with learned, mature and responsible scientists. They can set things on fire and destroy life, but they wouldn’t know how to create or control something if their degenerate lives depended on it. They are good at one thing only: failing.

Recall that on 14 August this year, the World Health Organization declared the Mpox pandemic a public health emergency of international concern. That should have snapped the world into another “new normal,” but the world shrugged, yawned and moved on. They are not omnipotent and they are light years away from being omniscient. They’re losing and we are winning, for we have the truth on our side. Slowly but surely we are prevailing in the struggle for truth and we’re doing it every day.

The above article was adapted from my 2016 book “Mastering Uncertainty in Commodities Trading,” which was rated the #1 book on the FinancialExpert.co.uk list of “The 5 Best Commodities Books for Investors and Traders” for 2021 and 2022. You can download it for free at the link above. A more recent title which covers much of the same curriculum is “Alex Krainer’s Trend Following Bible.” It has not won any awards, but I do believe that it is the better text nevertheless.

Alex Krainer – @NakedHedgie is the creator of I-System Trend Following and publisher of daily TrendCompass investor reports which cover over 200 financial and commodities markets. One-month test drive is always free of charge, no jumping through hoops to cancel. To start your trial subscription, drop us an email at TrendCompass@ISystem-TF.com


"If we can’t predict something, how would we even begin to control it?"

Economists have no problem mustering up this chutzpah.

Where did "Alexa" get THIS IDEA? Alexa Calmly Tells Stunned Americans Hurricane Helene Was Artificially Created, By Cloud Seeding, Celia Farber

"It Was Also The First Manmade Hurricane In History." —ALEXA https://celiafarber.substack.com/p/alexa-calmly-tells-stunned-americans

Celia Farber, Catherine Austin Fitts On Helene: "It's Not A Natural Event." Says It Is A Giant Taking, Land Grab, WH Leveraging Disaster Recovery Against Election: "Assertion Of Top Down Control Of Counties"

"The goal of this operation and [Hurricane Helene] is an operation, it's not a natural event...[it] got steered in my opinion," Fitts says. "The goal of this operation is to take assets and...it's basically a giant taking...to grab land."

The investment banker says:

"We're having lots of reports of land grab tactics being applied" and adds that the operation "relates to the [U.S. presidential] election."

"I suspect we're seeing the White House basically—and, again, this is conjecture—negotiate terms. You know, you'll get disaster recovery if, you know, you do this, this, and this for the election," Fitts says.

"The president is saying he's gonna call back Congress, that there's not enough money at FEMA to go through the hurricane season, and so they're strong-arming Congress to come back and basically give the administration what they want on the continuing appropriations process."

Furthermore, Fitts says this is also an instance of central bankers attempting to wrangle more control for themselves. "This is all part of installing the digital ID and financial transaction infrastructure you need to assert absolute control," Fitts says. "And if you look at the patterns, whether it's the taking or the land grab or other reports we're getting out of that area, it looks to me like a lot of assertion of top-down control of counties." https://celiafarber.substack.com/p/catherine-austin-fitts-on-helene

Dane Wigington, in 90 second time lapse video with artificial color for ground-based radio-frequency transmissions, proportional to output-power, shows that these steering transmissions were everywhere that the Hurricane Helene was not, leaving an open channel of travel. It had been heavily seeded with charged nanoparticles while it was forming, which makes it more subject to radio-frequency steering. Hurricane Helene And Frequency Transmissions, 90 Second Alert https://www.geoengineeringwatch.org/hurricane-helene-and-frequency-transmissions-90-second-alert/